Humans have firmly retained their lead over robots at table tennis for over 40 years, but recent advancements at Google DeepMind suggest our days of dominance may be numbered. As detailed in a preprint paper released on August 7, researchers have designed the first-ever robotic system capable of amateur human-level performance in ping pong—and there are videos to prove it.

Researchers often opt for classic games like chess and Go to test the strategic capabilities of artificial intelligence, but when it comes to combining strategy and real-time physicality, table tennis is a longtime robotics industry standard. Engineers have pitted machines against humans in countless rounds of ping pong for more than four decades because of the sport’s intense computational and physical demands, which involve rapid adaptation to dynamic variables, complex motions, and visual coordination.

“The robot has to be good at low level skills, such as returning the ball, as well as high level skills, like strategizing and long-term planning to achieve a goal,” Google DeepMind explained in an announcement thread posted to X.

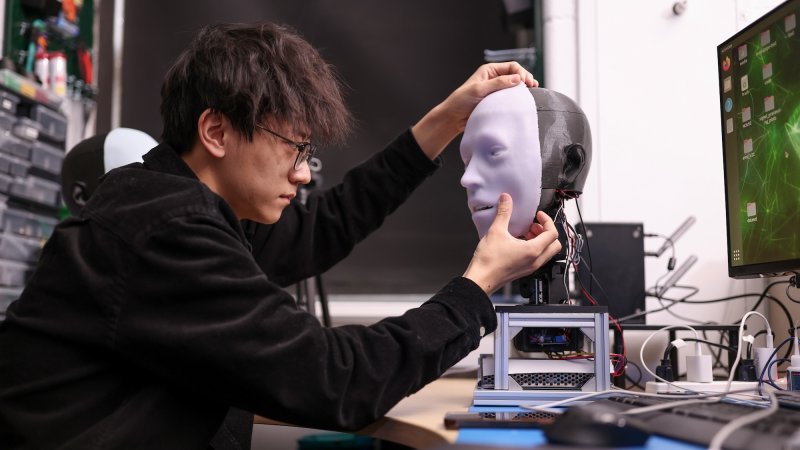

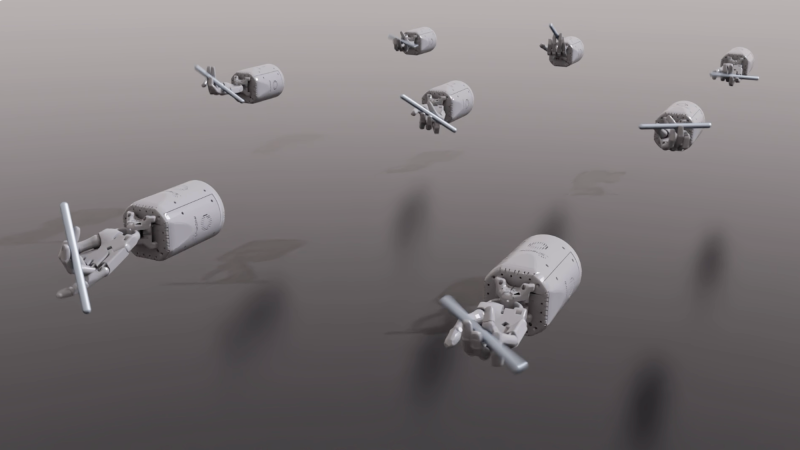

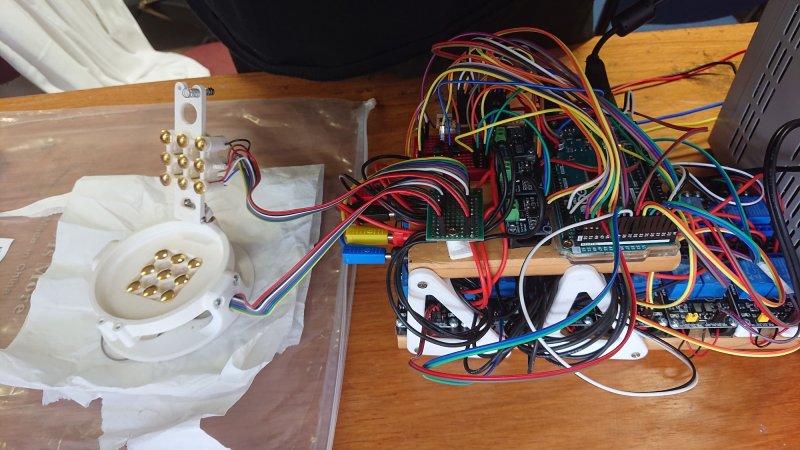

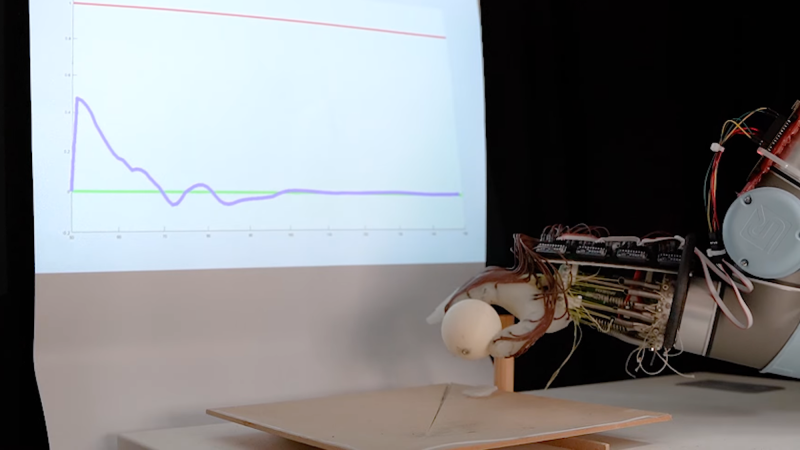

To develop their highly advanced bot, engineers first compiled a large dataset of “initial table tennis ball states” including information on position, spin, and speed. They then tasked their AI system with practicing on this dataset in physically accurate virtual simulations to learn skills like returning serves, backhand aiming, and forehand topspin. From there, they paired the AI with a robotic arm capable of complex, quick movements and set it against human players. Data from those matches, including visual information on the ping pong balls captured by cameras onboard the bot, was then analyzed again in simulations to create a “continuous feedback loop” of learning.


Then came the tournament. Google DeepMind enlisted 29 human players ranked across four skill levels (beginner, intermediate, advanced, and “advanced+”) and had them play against the track-mounted robotic arm. The machine won 13 of those matches, or 45 percent of its challenges, demonstrating “solidly amateur human-level performance,” according to researchers.

Table tennis enthusiasts worried about losing their edge to robots can breathe a (possibly temporary) sigh of relief. While the machine beat every beginner-level player, it won only 55 percent of its matches against intermediate competitors and failed to win any against players in the two advanced tiers. Meanwhile, study participants described the overall experience as “fun” and “engaging,” regardless of whether they won their game. They also reportedly expressed overwhelming interest in rematches with the robot.