Google’s annual I/O developer conference highlights the work the tech giant is doing to improve its large family of products and services. This year, the company made clear that it is going big on artificial intelligence, especially generative AI. Expect to see more AI-powered features coming your way across a range of key services in Google’s Workspace, apps, and Cloud.

“As an AI-first company, we’re at an exciting inflection point…We’ve been applying AI to our products to make them radically more helpful for a while. With generative AI, we’re taking the next step,” Sundar Pichai, CEO of Google and Alphabet, said in the keynote. “We are reimagining all our core products, including search.”

Here’s a look at what’s coming down the AI-created road.

Users will soon be able to work alongside generative AI to edit their photos, create images for their Slides, analyze data in Sheets, craft emails in Gmail, make backgrounds in Meet, and even get writing assistance in Docs. Google is also applying AI to translation by matching lip movements to the translated words, so that a person speaking in English could have their words translated into Spanish, with their lip movements adjusted to match. To help users tell which content generative AI has touched, the company said it is working on special watermarks and metadata notes for synthetic images as part of its responsible AI effort.

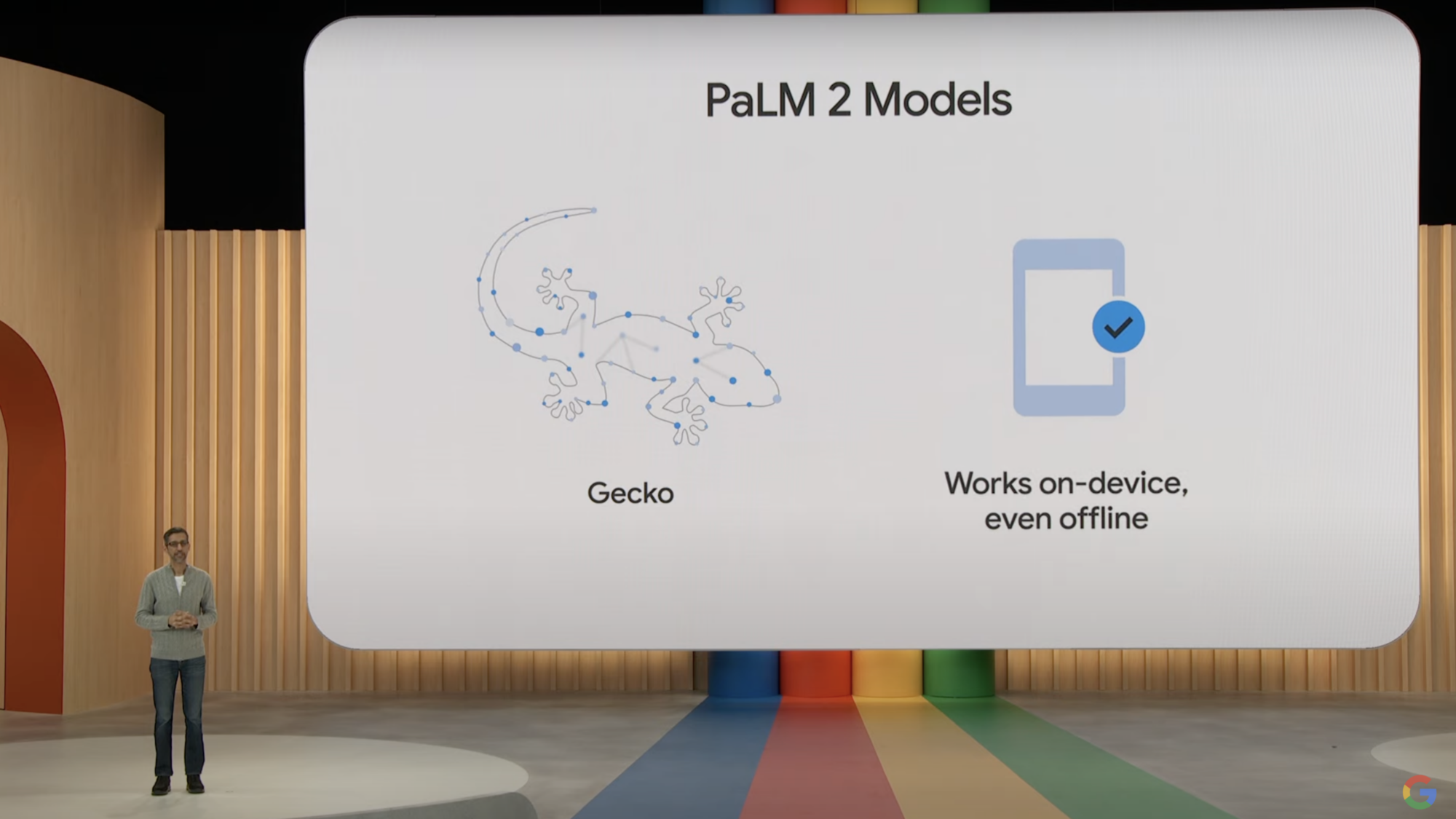

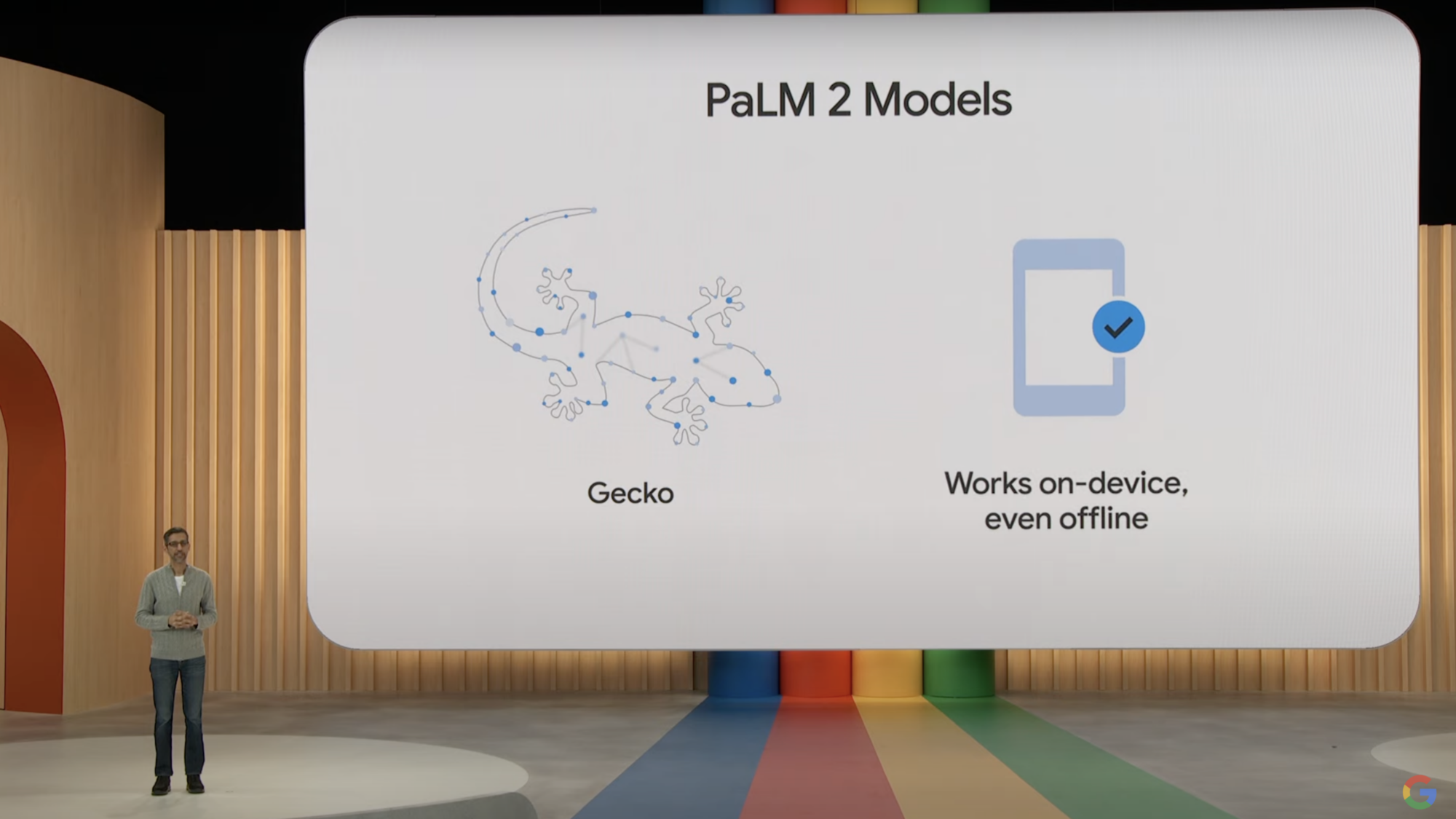

The foundation of most of Google’s new announcements is an upgrade to its language model PaLM, which has previously been used to answer medical questions typically posed to human physicians. PaLM 2, the next iteration of this model, promises to be faster and more efficient than its predecessor. It comes in four sizes, from smallest to largest: Gecko, Otter, Bison, and Unicorn. The most lightweight model, Gecko, could be a good fit for mobile devices and offline modes. Google is currently testing this model on the latest phones.


PaLM 2 is also more multilingual and better at reasoning, according to Google. The company says that a large number of scientific papers and mathematical expressions were added to its training dataset to help it with logic and common sense. It can also tackle more nuanced text such as idioms, poems, and riddles. PaLM 2 is being applied to medicine, cybersecurity analysis, and more. At the moment, it also powers 25 Google products behind the scenes.

“PaLM 2’s models shine when fine-tuned on domain-specific data. (BTW, fine tuning = training an AI model on examples specific to the task you want it to be good at.)” Google said in a tweet.

A big reveal is that Google is now making its chatbot, Bard, available to the general public. It will be accessible in over 180 countries and will soon support over 40 languages. Bard now runs on the upgraded PaLM 2 language model, so it should inherit that model’s improved capabilities. To save information generated with Bard, users will be able to export queries and responses from the chatbot to Google Docs or Gmail. And if you’re a developer using Bard for code, you can export your work to Replit.

In essence, the theme of today’s keynote was clear: AI is helping to do everything, and it’s getting increasingly good at creating text and images and at handling complex queries, like helping someone interested in video games find colleges in a specific state that offer a major they might want to pursue. Like Google Search, Bard is constantly evolving and becoming more multimodal. Google aims to soon let Bard include images in its responses and accept images in prompts through Google Lens. The company is also actively working on integrating Bard with external applications, such as those from Adobe, as well as a wide variety of tools, services, and extensions.