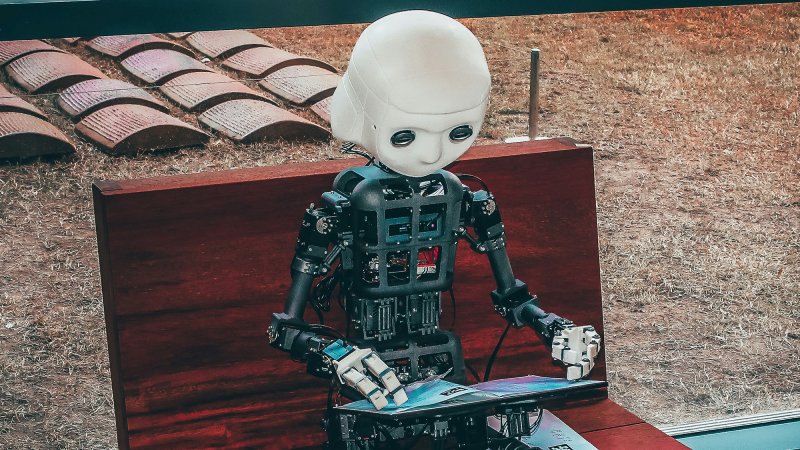

For years, science fiction authors and real-world robotics researchers alike have dreamed of a future where dozens, or even hundreds, of tiny, bug-sized drones work in tandem to autonomously survey vast areas. But there’s a problem. These handheld, lightweight machines simply lack the processing power and carrying capacity that let driverless cars and other larger robots navigate the world independently. Researchers from the Delft University of Technology (TU Delft) in the Netherlands now believe they may have found a solution to that dilemma, and it starts with the humble ant.

Ants, and insects in general, have long fascinated biologists because they can set out on long journeys to forage for food and other resources and still find their way home. Desert ants inhabiting the Sahara can travel more than two kilometers from their nests without getting lost. Honeybees can commute nearly twice as far, with brains smaller than a grain of rice. Researchers think ants in particular may navigate by counting their steps and by using a “snapshot model,” in which they essentially take mental photographs of their surroundings. Later, while traveling, the insects compare what they see with a stored snapshot and adjust course until the two match up. The result is a remarkably effective means of navigation that requires minimal memory and brainpower.
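The matching step of the snapshot model can be sketched in a few lines. This is a hypothetical toy, not the TU Delft implementation: it assumes the view is a 1-D ring of brightness values and uses a rotational image-difference comparison, one common way of modeling insect snapshot matching, to find the heading at which the current view best lines up with a stored snapshot. The function names and pixel values are illustrative assumptions.

```python
from typing import List, Sequence


def image_difference(view: Sequence[float], snapshot: Sequence[float]) -> float:
    """Sum of squared pixel differences between two equal-length panoramic views."""
    return sum((v - s) ** 2 for v, s in zip(view, snapshot))


def rotate(view: Sequence[float], shift: int) -> List[float]:
    """Rotate a 1-D panoramic view by `shift` pixels, i.e. simulate turning on the spot."""
    n = len(view)
    return [view[(i + shift) % n] for i in range(n)]


def best_heading(view: Sequence[float], snapshot: Sequence[float]) -> int:
    """Return the pixel shift at which the current view best matches the stored snapshot."""
    return min(
        range(len(view)),
        key=lambda shift: image_difference(rotate(view, shift), snapshot),
    )


if __name__ == "__main__":
    # Hypothetical 8-pixel brightness panorama stored at the snapshot location.
    snapshot = [0, 0, 1, 5, 9, 5, 1, 0]
    # The same scene seen after the agent has turned by 3 pixels.
    view = rotate(snapshot, 3)
    print(best_heading(view, snapshot))  # → 5, since 3 + 5 = 8 pixels is a full turn
```

The sketch covers only turning to face the best match; a homing agent would also have to move in the direction that keeps shrinking the image difference until it reaches the snapshot location.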

In a paper published July 17 in Science Robotics, the TU Delft researchers set out to see whether they could take those observations from the insect world and apply them to a 56-gram flying drone equipped with a tiny camera and a cheap processor. The researchers created an indoor obstacle course and several snapshot images for the drone to reference. The snapshots were spaced as far apart as possible to reduce the total number of images the drone had to store, which in turn meant the drone needed less memory.

Once deployed, the minimalist drone was able to home in on each stored snapshot and move from point to point through the course, like links in a chain, before eventually returning to its home base. In the end, the drone successfully traveled 100 meters around the course using just 0.65 kilobytes of memory. All of the visual computing the insect-inspired robot needed to complete the course ran on a common microcontroller of the kind often found in cheap consumer electronics.
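The chain-of-snapshots idea, and the trade-off behind spacing snapshots far apart, can be illustrated with a toy 1-D model. This is a hypothetical sketch, not the TU Delft method: it assumes each snapshot has a fixed catchment area within which homing reliably succeeds (`CATCHMENT_M`) and a fixed storage cost per snapshot (`BYTES_PER_SNAPSHOT`). Both numbers are invented for illustration; only the 100-meter route length comes from the article. Wider spacing saves memory, but once snapshots sit farther apart than the catchment area, the chain breaks.

```python
import math
from typing import List, Sequence


def snapshot_positions(route_length_m: float, spacing_m: float) -> List[float]:
    """Home base (0 m) plus evenly spaced snapshot locations along a 1-D route."""
    count = math.ceil(route_length_m / spacing_m)
    return [0.0] + [min(i * spacing_m, route_length_m) for i in range(1, count + 1)]


def follow_chain(start_m: float, waypoints: Sequence[float], catchment_m: float) -> bool:
    """Home on each snapshot in turn; fail if the next one lies outside its catchment area."""
    position = start_m
    for target in waypoints:
        if abs(target - position) > catchment_m:
            return False  # view too different from the snapshot: homing may head the wrong way
        position = target  # idealized: homing converges exactly on the snapshot location
    return True


def round_trip_ok(snapshots: Sequence[float], catchment_m: float) -> bool:
    """Fly out along the chain, then reuse the same snapshots in reverse to return home."""
    outbound = follow_chain(snapshots[0], snapshots[1:], catchment_m)
    inbound = follow_chain(snapshots[-1], snapshots[-2::-1], catchment_m)
    return outbound and inbound


if __name__ == "__main__":
    ROUTE_M = 100.0          # route length reported in the article
    CATCHMENT_M = 10.0       # hypothetical: how close the drone must be for homing to work
    BYTES_PER_SNAPSHOT = 50  # hypothetical storage cost of one snapshot
    for spacing in (5.0, 10.0, 20.0):
        snaps = snapshot_positions(ROUTE_M, spacing)
        print(f"spacing {spacing:>4} m: {len(snaps):>2} snapshots, "
              f"{len(snaps) * BYTES_PER_SNAPSHOT:>4} bytes, "
              f"round trip ok: {round_trip_ok(snaps, CATCHMENT_M)}")
```

With these made-up numbers, 10-meter spacing is the widest that still completes the round trip (11 snapshots, 550 bytes); 20-meter spacing needs only 6 snapshots but fails.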

“The experiments show that even tiny robots can navigate autonomously,” the researchers wrote.

Delft University of Technology professor and paper author Tom van Dijk compared the snapshot process to the fairy tale of Hansel and Gretel, in which Hansel drops breadcrumbs and stones to help the pair find their way home.

“When Hansel threw stones on the ground, he could get back home. However, when he threw bread crumbs that were eaten by the birds, Hansel and Gretel got lost,” van Dijk said in a statement. “In our case, the stones are the snapshots.”

“As with a stone, for a snapshot to work, the robot has to be close enough to the snapshot location,” van Dijk added. “If the visual surroundings get too different from that at the snapshot location, the robot may move in the wrong direction and never get back anymore.”

Bug-inspired drones could deploy in remote, inaccessible areas

The snapshot experiments could one day help swarms of small drones navigate autonomously without heavy, complex sensors or external infrastructure like GPS. That simplicity could come in handy in dense urban areas or remote caves where GPS may not be available. Self-navigating swarms of tiny camera-equipped drones could then be used to monitor crops for early signs of infection, track stock in warehouses, or even squeeze into small spaces to aid search-and-rescue operations. Military forces around the world, including the US, are also already testing ways to deploy drone swarms of varying sizes on the battlefield. And while their petite size prevents these drones from carrying lidar or the other powerful 3D-mapping sensors found on some larger robots, the TU Delft researchers argue that such capability may be overkill for many use cases.

“For many applications this may be more than enough,” Delft University of Technology professor in bio-inspired drones and paper co-author Guido de Croon said.

“The proposed insect-inspired navigation strategy is an important step on the way to applying tiny autonomous robots in the real world,” he added.

Animal-inspired robots are everywhere

The snapshot experiment adds to the growing inventory of robots and drones that draw inspiration from animals. Roboticists have already created machines inspired by the movements of dogs, cats, mice, birds, and even a tuna. Yet despite continuing research into the natural world, mysteries remain about exactly how animals think and perform tasks that the average observer might take for granted. Further insight into animal behavior will likely continue to influence emerging robotics.