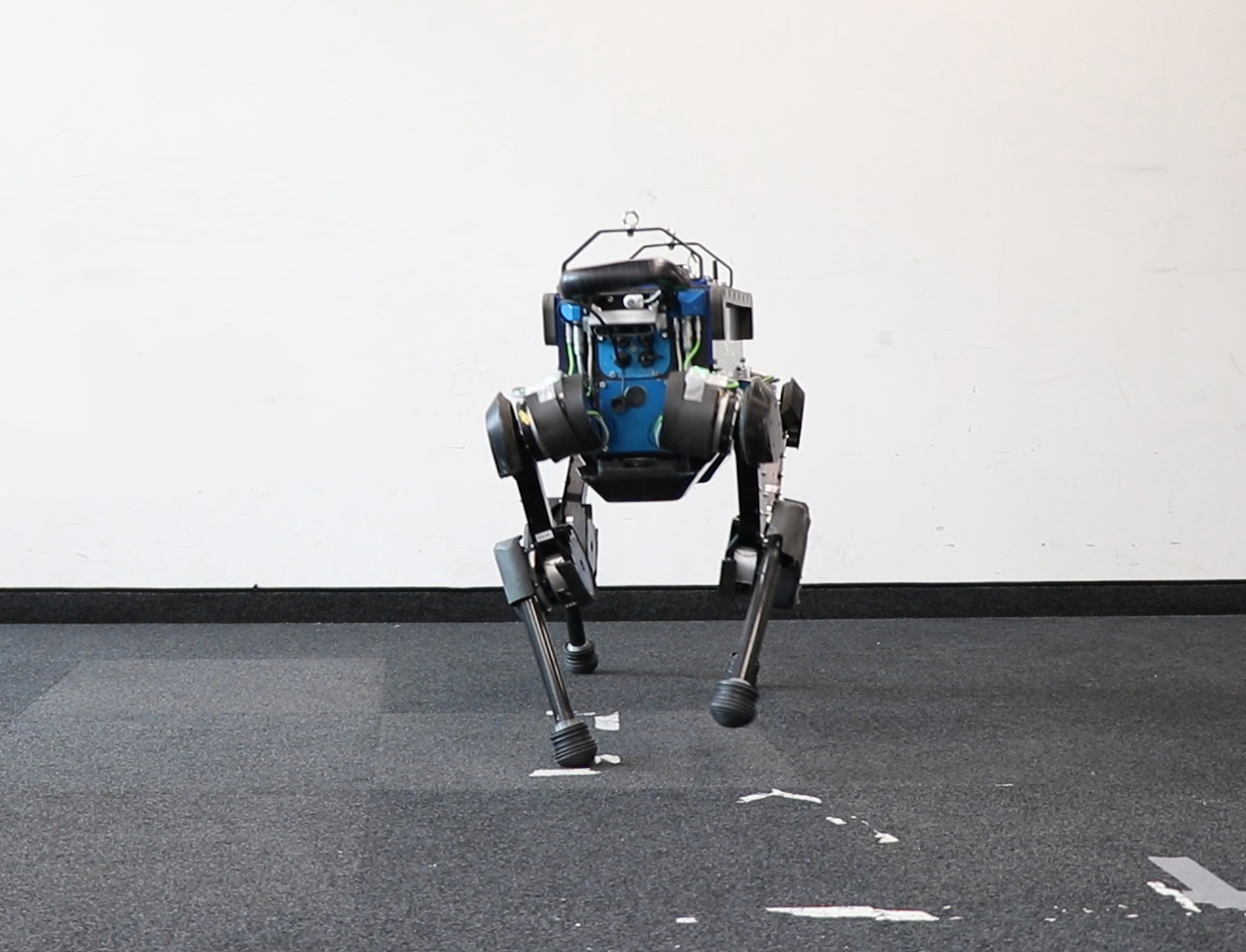

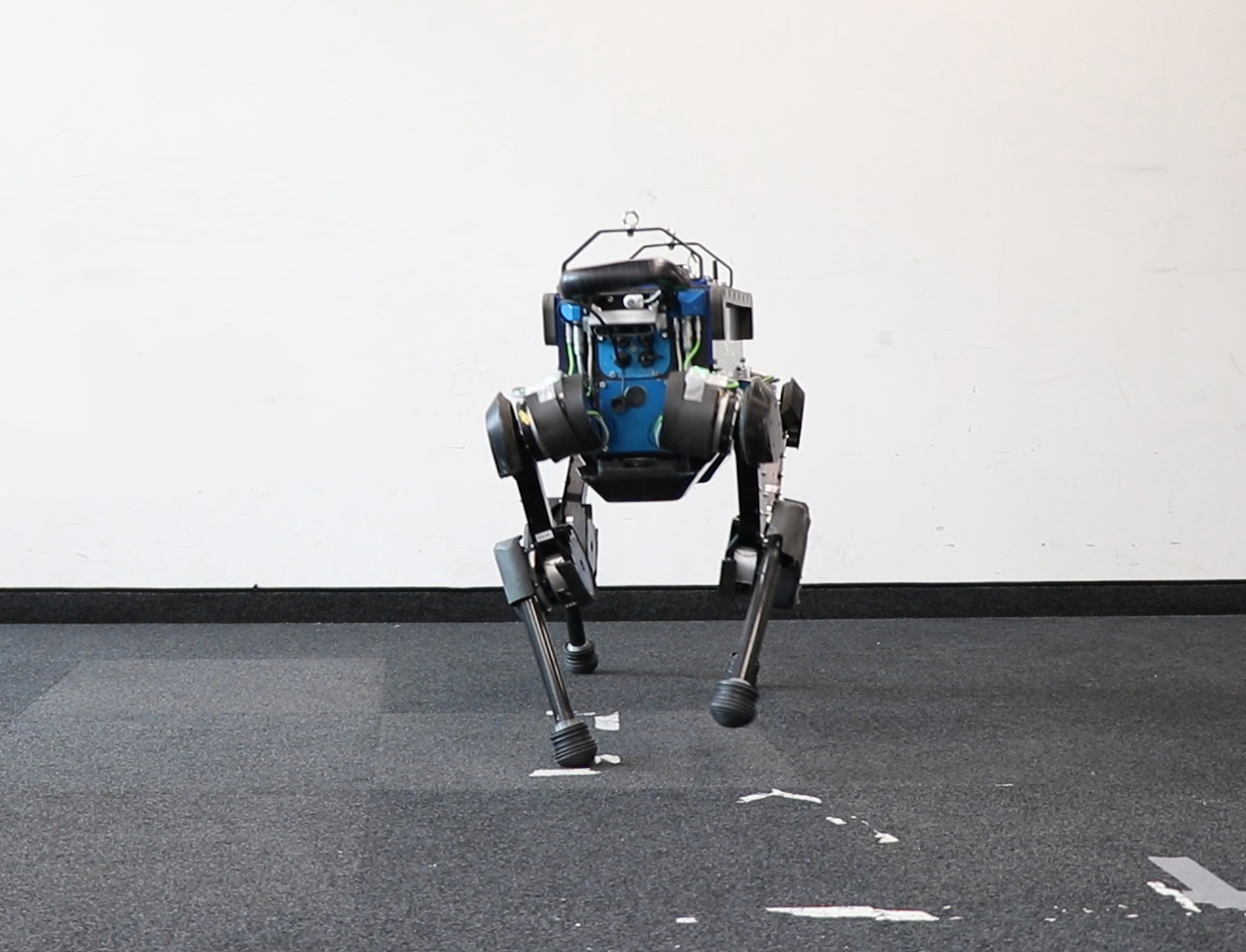

Meet ANYmal, a four-legged robot whose name is pronounced “animal.” Thanks to artificial intelligence, the 73-pound, dog-like Swiss-made machine can now run faster, move more efficiently, and get back up after a spill more reliably than it could before its AI training.

The robot, featured in a new study in the journal Science Robotics, learned its skills not only with AI but also through computer simulation on a desktop, a much faster approach than teaching a robot new tricks in the real, physical world. According to the study, simulation is roughly 1,000 times faster than the real world.

Robotics isn’t the only field where simulation matters: In the world of self-driving cars, companies rely on time in simulation to test and refine the software that operates the vehicles. Here, the researchers used a similar strategy, just with a robot dog.

To make sure the simulator in which the virtual dog learned its skills was accurate, the researchers first incorporated data about how the robot behaves in the real world. Then, in simulation, a neural network (a type of machine learning tool) learned how to control the robot.

Beyond its speed, simulation let the researchers do things to the robot that they wouldn’t want to do in real life. For example, they could virtually toss the breakable robo-dog into the air, says Jemin Hwangbo, the lead researcher on the project and a postdoctoral fellow at the Robotic Systems Lab in Zurich, Switzerland. The pup could then figure out how to stand back up after it landed.

After the neural network finished its training in simulation, the team deployed what it had learned onto the physical robot, which stands over 2 feet tall, has 12 joints, is electrically powered, and looks similar to a robot called SpotMini made by Boston Dynamics.

The result of all that simulated training was a robo pooch that follows commands more precisely. For example, when commanded to walk at 1.1 mph, it now holds that speed more accurately than before, according to Hwangbo. It also gets up successfully after a fall, and it runs faster. Hand-programming a complex robot like ANYmal with step-by-step instructions for getting up after a fall is complicated, while letting it learn the skill in simulation is a much more robust approach.

Chris Atkeson, a professor in both the Robotics Institute and the Human-Computer Interaction Institute at Carnegie Mellon University, says the method Hwangbo and his team used saves time and money when it comes to getting a robot to do what you want it to do.

“They made robot programming cheaper,” he says. “Programming is very expensive, and robot programming is really expensive, because you basically have to have robot whisperers.” That’s because the people who program robots need to be good both at coding and at getting the robot’s mechanics to perform properly.

Hwangbo and his team, by contrast, let their robot learn in simulation instead of having programmers carefully code each action. It’s “a big step towards automating that kind of stuff,” Atkeson says.

As for the video from the Robotic Systems Lab that shows the robot being kicked, presumably to test how robust it is? “When this robot gets super intelligent, it’s going to get pissed off,” Atkeson jokes. “There’s the video record of them kicking it, so they’re going to be the first to go when the robot revolution comes.”