You know that little voice in your head, the one that says, “Maybe jumping off this cliff isn’t a good idea,” or, “You can’t drink a whole gallon of milk”?

That murmur is self-doubt, and its presence helps keep us alive. Robots don’t have this instinct; just look at the DARPA Robotics Challenge. But for robots and drones to operate in the real world, they need to recognize their limits. We can’t have a robot flailing around in the darkness, or trying to bust through walls. In a new paper, researchers at Carnegie Mellon describe their work on giving robots introspection, or a sense of self-doubt. By using artificial intelligence to predict the likelihood of their own failure, robots could become a lot more thoughtful, and safer as well.

To gauge whether an action will fail or not, the key is prediction. As humans, we predict outcomes naturally, by imagining everything that could go wrong after making a decision. In the case of deciding a drone’s flight path, an algorithm would have to look at the video coming from the drone’s camera, and predict based on the objects in the picture if its next action will cause a crash. The drone would have to imagine the future.
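The decision rule described above can be sketched in a few lines of Python. This is only an illustration, not the Carnegie Mellon researchers' method: the `FrameFeatures` fields, the hand-picked weights in `predicted_failure`, the `0.5` threshold, and the `"hover"` fallback are all assumptions made up for the example. A real system would learn the failure predictor from camera footage.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class FrameFeatures:
    # Hypothetical summary of what the camera sees along one candidate path.
    obstacle_density: float  # 0.0 (clear) to 1.0 (cluttered)
    brightness: float        # 0.0 (dark) to 1.0 (well lit)


def predicted_failure(features: FrameFeatures) -> float:
    """Toy stand-in for a learned model: returns a failure probability in (0, 1)."""
    # Assumed weights: clutter raises the risk, good lighting lowers it.
    score = 4.0 * features.obstacle_density - 3.0 * features.brightness
    return 1.0 / (1.0 + math.exp(-score))


def choose_action(candidates: Dict[str, FrameFeatures], threshold: float = 0.5) -> str:
    """Pick the least risky candidate, or fall back to hovering if none looks safe."""
    best = min(candidates, key=lambda name: predicted_failure(candidates[name]))
    if predicted_failure(candidates[best]) > threshold:
        return "hover"  # assumed safe fallback when every option looks too risky
    return best
```

With these made-up weights, a well-lit, mostly clear path to the left would be preferred over a cluttered path straight ahead, while a single dark, half-cluttered option would make the drone hover instead of committing to it.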

This idea goes beyond object detection, a feature found in some drones now. That detection is simple: if there’s something in the way, don’t go there. But this framework would allow the A.I. to actually determine if the scenario could be detrimental to its primary mission. That includes weather conditions that block the drone’s sensors, or a lack of the light its cameras need. It’s about understanding a potential threat, rather than just reacting to it.

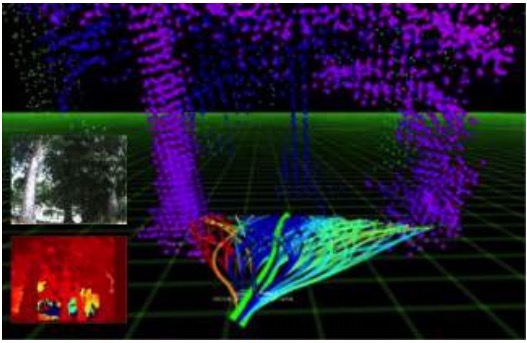

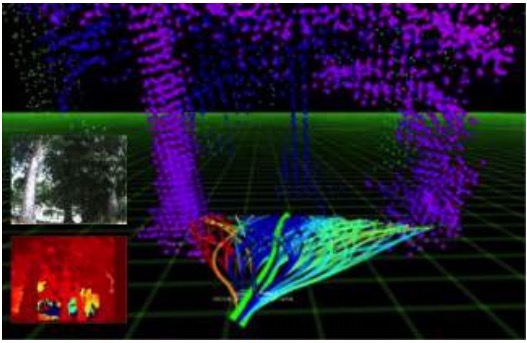

In Carnegie Mellon’s tests, researchers set a modified drone to fly autonomously through trees in a park. By predicting its own trajectory and the likelihood of crashing on that course, the drone flew more than twice as long as it did in attempts without introspection, covering more than 1,000 meters while weaving through obstacles.

Still, this work is preliminary, and Carnegie Mellon is not alone in pursuing it. Microsoft Research’s Eric Horvitz has been working on this idea for years, focusing on making many decision-making algorithms work together.

“We’re developing active systems that reflect about many many things at once that can think together and coordinate elegantly many competencies and functions, so that they operate more or less like a well-practiced symphony,” Horvitz said.

One of Horvitz’s experiments reenacted a game show, but with an A.I. host and two human competitors. The A.I. would pose questions, and the humans would answer. But to maintain a proper conversation, such as asking if the contestant needed more time, the A.I. would estimate how long it would take to compute a response, and check that against how long it would probably be before a human spoke. If computing the response would take too long, the robot would stay silent.
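The timing check in that experiment boils down to a comparison between two estimates. Here is a minimal Python sketch of the idea; how the Microsoft Research system actually produces its estimates isn't described here, so averaging past response times and the function names `estimate_compute_time` and `should_speak` are assumptions for illustration only.

```python
from statistics import mean
from typing import Sequence


def estimate_compute_time(past_durations_s: Sequence[float]) -> float:
    """Assumed estimator: average of how long earlier responses took, in seconds."""
    return mean(past_durations_s)


def should_speak(past_durations_s: Sequence[float], expected_human_pause_s: float) -> bool:
    """Speak only if a response is likely to be ready before the human talks."""
    return estimate_compute_time(past_durations_s) < expected_human_pause_s
```

If earlier responses took about half a second and the contestant is expected to stay quiet for two seconds, the host speaks; if responses have been taking around three seconds, it stays silent.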

“Adding that layer was beautiful, it made the system much more elegant,” Horvitz said.

If there’s a path to self-awareness in robots, it’s through teaching introspection. It’s instilling a crude imperative to survive, a thought that might make some futurists shudder. But for now, these robots can barely dodge trees, so humans will be safe for at least another few years.