This has been a good week for artificial intelligence: On Monday, Google publicly released TensorFlow, a powerful machine learning platform that researchers can use to create their own artificial intelligence programs. On Tuesday Nvidia announced a sizable update to its supercomputer-on-a-chip, the Jetson TX1. Now Microsoft has updated its own suite of artificial intelligence tools, called Project Oxford, with a series of powerful new features that could soon find their way in apps used by all of us, including a program that identifies human emotion, and another that can identify individual human voices in a noisy room.

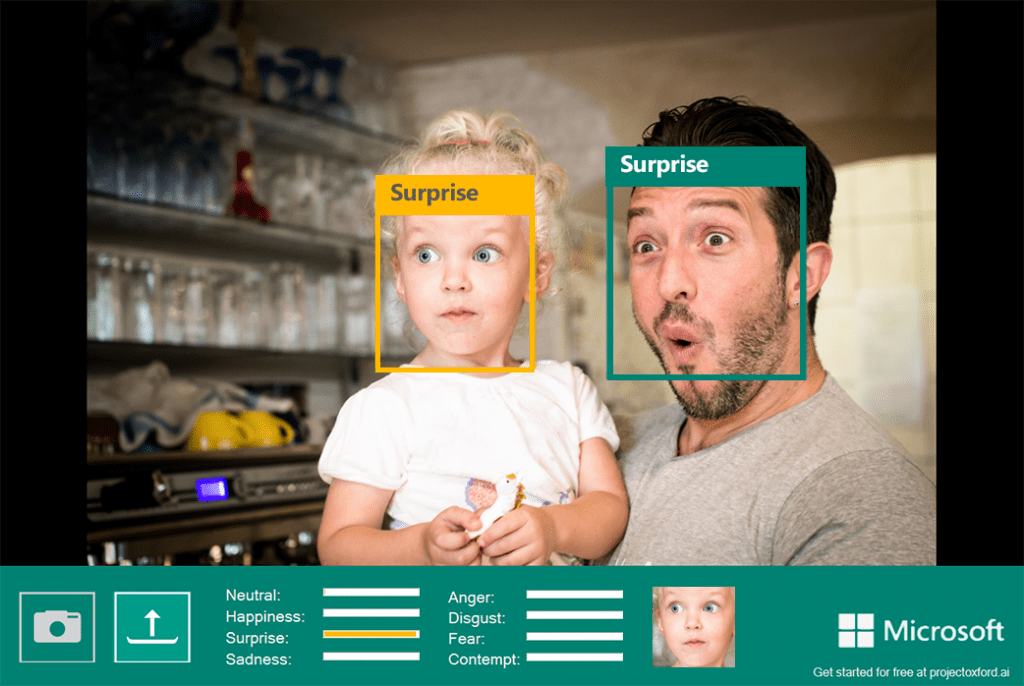

The big new features include a series of new application programming interfaces (APIs), which are tools that software developers outside Microsoft can use to build their own independent apps. Microsoft mainly hyped its Emotion API, which uses machine learning to recognize eight states of emotion (anger, contempt, fear, disgust, happiness, neutral, sadness, or surprise), based on facial expressions. (It’s a lot like the work of Paul Ekman, more popularly made into the defunct TV show “Lie To Me.”)

The Emotion API is available today, and was debuted earlier in the week in MyMoustache, a moustache-identifying web application for the Movember charity.

Microsoft says that this could be useful in gauging customers’ reactions to products in stores, and even a reactive messaging app.

Speaking of messaging, Microsoft is also releasing a spell checking API, which improves on standard, rules-based checkers. The software can tell the difference between “for” and “four” in a sentence, and learn popular expressions as people use them (“selfie”). Microsoft hasn’t really clarified yet just how all this data is stored and managed with regards to user privacy.

This is similar to Facebook’s machine learning in translation: the company scrapes users’ Facebook posts for new phrases and slang words to be incorporated into Facebook’s translators at least monthly, and is in the process of increasing the frequency of that training.

For the field of computer vision, Microsoft is also releasing a Video API, which detects shaky camera movement, motion, and faces. The software can be implemented to edit video automatically, like a few action cameras currently do, and its also used in Microsoft Hyperlapse. It will be available (in beta) by the end of the year.

Covering most bases, Microsoft is also putting forward two APIs that make it easier to understand people who are speaking: one geared towards identifying a speaker, and the other that can recognize what people are saying through the din of a crowded room, or those with accents or trouble speaking. The one that cuts through the noise is called Custom Recognition Intelligent Services, and will be available on an invite-only basis at the end of the year.

Accents and loud environments have been traditionally tricky for artificial intelligence systems to recognize, but researchers are now finding ways around that. For instance, Google’s Voice Search developers actually add noise to the samples that the A.I. listens to when its learning different phrases, so it is better suited for real-world environments.

While Google’s TensorFlow platform is targeted towards the researcher and programmer, Microsoft is aiming for the app developer with focused, easy APIs. With easier tools like this, more mainstream apps that aren’t backed by multi-billion dollar companies will be able to integrate deep learning and artificial intelligence into their apps. A.I. is the next software frontier, and Microsoft just made it a whole lot easier for smaller developers to get involved.