If you give a computer enough photos and the right algorithm, it can learn to see. And if the photos show damaged eyes, the computer can learn to diagnose eye disease even better than humans can.

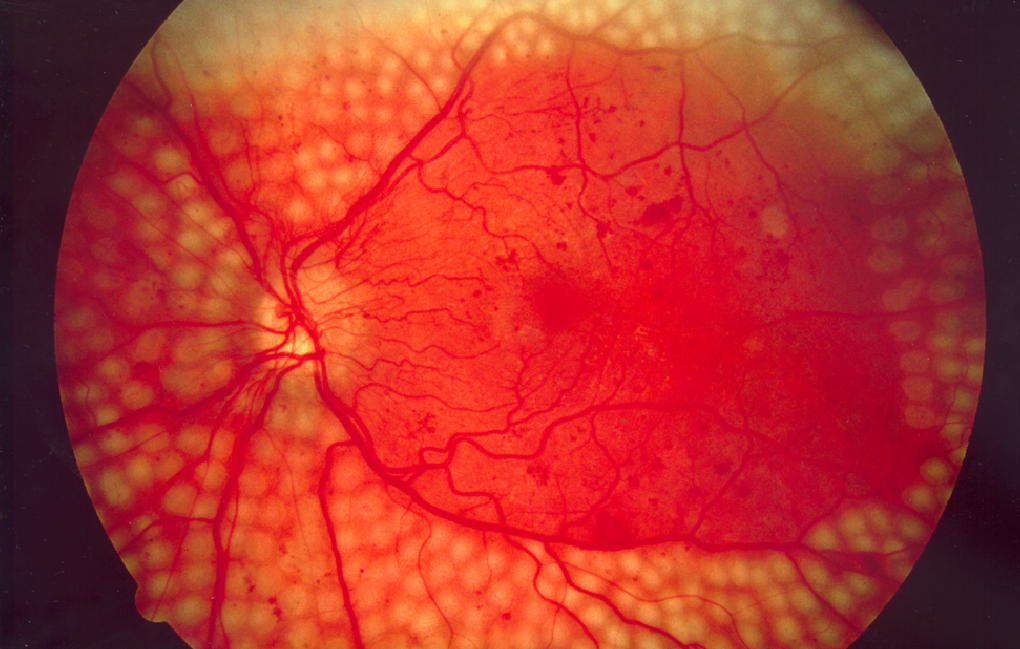

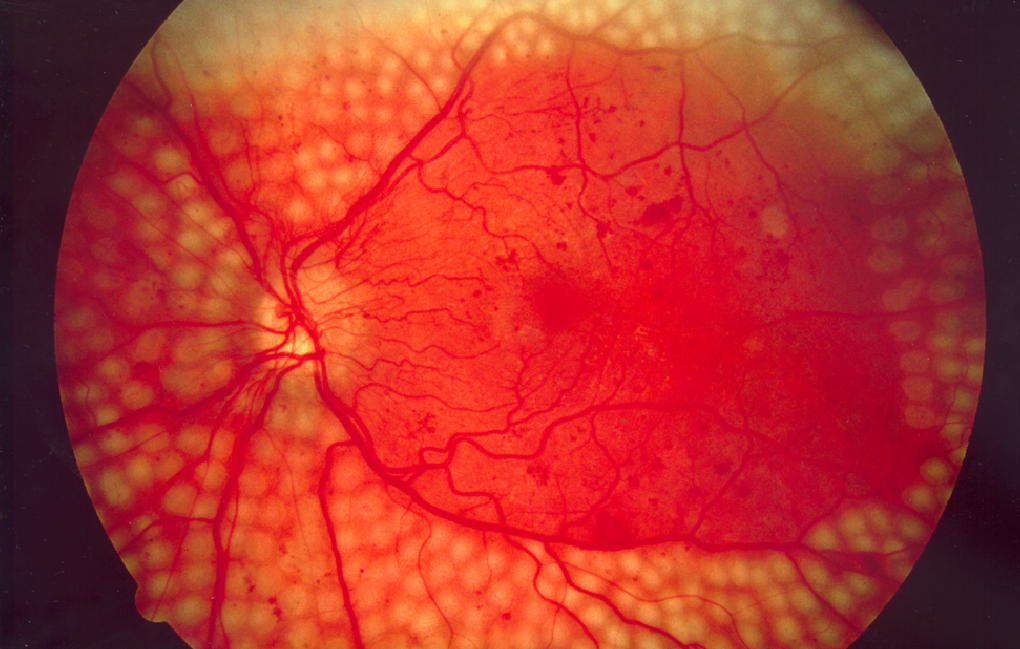

People with diabetes frequently suffer from a condition called diabetic retinopathy, where the tiny blood vessels at the back of their eyes (the retina) become damaged and start to leak. About one in three diabetics have this kind of damage, and if left untreated it can cause permanent blindness. With early detection, though, it’s quite treatable.

The problem is that many people don’t have access to an ophthalmologist who can diagnose them. There are 387 million diabetics worldwide who need to see specialists in order to catch the disease early, and our current prevention isn’t working well enough — diabetic retinopathy is the leading cause of vision impairment and blindness in the working-age population.

So Google devised a way to use deep machine learning to teach a neural network how to detect diabetic retinopathy from photos of patients’ eyes. They published their work in the Journal of the American Medical Association on Tuesday.

A neural network is kind of like an artificial brain, albeit a simple one. By showing it a huge set of images of patients with and without retina damage, engineers can train the network to distinguish between diseased and non-diseased eyes. After the training, the Google team tested whether the algorithm could detect diabetic retinopathy as well as ophthalmologists who had examined the same images.
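To make "training" a little more concrete, here is a minimal sketch in Python. It is not Google's method: their system is a deep network that learns from the raw photos, while this toy uses a single artificial neuron and two invented numbers per eye. The features, the data, and the names `TRAINING`, `predict`, and `train` are all assumptions made up for illustration.

```python
import math

# Made-up training data: each example is ([feature_1, feature_2], label).
# The two features are hypothetical summaries of a retina photo, scaled 0 to 1.
# Label 1 means "damaged", label 0 means "healthy".
TRAINING = [
    ([0.9, 0.8], 1), ([0.8, 0.6], 1), ([0.7, 0.9], 1), ([0.6, 0.7], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.2], 0), ([0.2, 0.3], 0),
]

def predict(weights, bias, features):
    """Return the neuron's estimated probability that the eye is damaged."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=2000, lr=0.5):
    """Nudge the weights after every example to shrink the prediction error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

weights, bias = train(TRAINING)

# Two eyes the neuron has never seen before (also invented).
damaged_guess = predict(weights, bias, [0.85, 0.75]) > 0.5
healthy_guess = predict(weights, bias, [0.15, 0.15]) > 0.5
print(damaged_guess, healthy_guess)
```

The idea is the same one described above, just much smaller: show examples with known answers, adjust the numbers inside the model whenever it guesses wrong, then check it on cases it hasn't seen.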

Google’s algorithm performed slightly better than the human ophthalmologists, which suggests that the neural network could help screen patients in the future, or at least assist doctors in the diagnosis process.

Doctors already use a similar kind of technology to help diagnose diseases like heart disease and some kinds of cancer. The current technology isn’t as advanced as Google’s new deep learning algorithm, but it’s based on the same principle. Doctors identify issues like artery blockage in heart disease and abnormal growths in cancer by looking at images of your body, whether from an x-ray or a CT scan. A specialist in these kinds of images, a radiologist, has years of experience looking at scans and picking out problematic areas.

But human vision is only so good, and people are prone to making mistakes. If a computer could do the same thing, it would probably be able to surpass a human’s ability to find cancerous growths or blocked arteries. The logical solution is to teach a computer what an irregular image looks like versus a regular image. That might seem simple—understanding an image is easy enough for people, after all.

The trouble is that understanding images is more difficult for computers than it is for a human brain. If you show the picture above to a computer, all it really sees is a series of pixels with certain colors assigned to them. You, on the other hand, see a beach and a woman. You can identify her sunglasses and hat. You know that she’s jumping and wearing a green bikini with little white flowers, and that it’s overcast. A computer doesn’t know any of that, unless it has computer vision.
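Here is a rough illustration, in Python, of what "a series of pixels with certain colors" means. The tiny image below is invented for the example (it is not the photo above), and `count_greenish` is a hypothetical helper, not part of any real vision library.

```python
# A tiny 2x3 "image": each pixel is a (red, green, blue) triple from 0 to 255.
# The values are made up for illustration.
image = [
    [(200, 200, 210), (198, 199, 209), (201, 203, 212)],  # grayish, like an overcast sky
    [(194, 178, 128), (30, 140, 60), (194, 178, 128)],    # sand, one green pixel, sand
]

def count_greenish(pixels):
    """Count pixels whose green channel is clearly stronger than red and blue."""
    return sum(
        1
        for row in pixels
        for (r, g, b) in row
        if g > r + 20 and g > b + 20
    )

height = len(image)
width = len(image[0])
greenish = count_greenish(image)
print(height, width, greenish)
```

The program can count green pixels, but nothing in those numbers says "bikini" or "beach." Getting from the numbers to that kind of meaning is the hard part.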

Computer vision is a way of teaching computers how to “see,” to look at that image and know that a woman is jumping on a beach in a green bikini. The way that computers currently help diagnose patients is a basic form of computer vision, but it can only assist with the process; it isn’t good enough to replace a set of human eyes.

Google has the potential to change that. The company is already great at computer vision, in part because it has an immense amount of data. You can see for yourself how well it already works, because Google uses its computer vision technology to organize your personal pictures. If you go to Google Photos right now (assuming that you have a Google account, which you probably do), you can see all the photos that the system has catalogued and search by term. Try “pictures of snow” or, better yet, “pictures of dogs.” Pictures of snow and dogs will show up, not because anyone tagged those photos with text, but because Google’s computer vision algorithm has identified snow and dogs in those images.

Diabetic retinopathy is one of the first diagnostic applications that Google has found for its deep learning computer vision team. Other teams are already working on similar projects. Cornell University has a Vision and Image Analysis Group that’s working on using computer vision to diagnose lung diseases, heart problems, and bone health issues. A Finnish group is working on how to diagnose malaria from images of blood, and IBM has spent years developing an algorithm to detect skin cancer.

One day, computer vision and deep learning could change the way doctors diagnose patients. But for now the FDA hasn’t signed off on using this kind of technology in medicine. If this is the way of the future, they’ll have to figure out how to regulate neural networks safely. In the meantime, you can use Google’s computer vision capabilities to find pictures of your dog and turn them into nightmares. Not as useful, perhaps, but a decent way to pass the time.